Okay, so today I’m gonna walk you through what I did with my “tiantian sun statistics” project. It’s basically a little playground I set up to mess around with some data analysis and visualization techniques. Nothing crazy, just a fun little project to keep my skills sharp.

First things first, I grabbed some data. I found a public dataset online as a CSV file, downloaded it, and put it into my project directory. Next up, I fired up a Jupyter notebook. I like using Jupyter because it lets me write code and see the results right away, which is super handy for exploring data.

Inside the notebook, I started by importing the libraries I knew I’d need: pandas for data manipulation, and Matplotlib and Seaborn for plotting. Then I used pandas to read the CSV file into a DataFrame and printed the first few rows, plus the column names and data types, to get a feel for what the data looked like. The whole nine yards.
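Here's a minimal sketch of what that loading step can look like. The post doesn't name the file, so `load_and_inspect` is a hypothetical helper and the three-row inline CSV is made-up stand-in data. With the real dataset you'd pass its path instead of the `StringIO` buffer.

```python
import io

import pandas as pd


def load_and_inspect(source, n_rows=5):
    """Read a CSV (path or file-like object) and print a quick overview."""
    df = pd.read_csv(source)
    print(df.head(n_rows))  # first few rows
    print(df.dtypes)        # column names and their inferred types
    print(df.shape)         # (rows, columns)
    return df


if __name__ == "__main__":
    # Made-up stand-in for the real CSV; the empty height cell becomes NaN.
    sample = io.StringIO(
        "group,height,weight\n"
        "a,170,65\n"
        "b,,72\n"
        "a,182,80\n"
    )
    df = load_and_inspect(sample)
```

`df.info()` is a handy alternative that shows dtypes and non-null counts in one go, which also tells you where the missing values are.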

Then came the fun part, the data cleaning! The dataset had some missing values, so I decided to fill them with the mean of their respective columns. Not always the best approach, but it was good enough for this little experiment. I also noticed some weird outliers in one of the columns, so I clipped those to a reasonable range. Again, not super rigorous, but I was just trying to get the data into a usable state.
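A sketch of that cleaning pass in pandas. The mean fill follows the description above. The clipping bounds are an assumption, since "a reasonable range" isn't spelled out: this version clips to the 1st and 99th percentiles, adjustable via the hypothetical `lower_q`/`upper_q` parameters.

```python
import numpy as np
import pandas as pd


def clean(df, clip_columns, lower_q=0.01, upper_q=0.99):
    """Mean-impute numeric NaNs, then clip chosen columns to quantile bounds."""
    df = df.copy()  # leave the raw DataFrame untouched
    numeric_cols = df.select_dtypes(include="number").columns
    # Fill each numeric column's gaps with that column's own mean (NaNs skipped).
    df[numeric_cols] = df[numeric_cols].fillna(df[numeric_cols].mean())
    for col in clip_columns:
        # Assumed "reasonable range": the lower_q and upper_q quantiles.
        low, high = df[col].quantile([lower_q, upper_q])
        df[col] = df[col].clip(lower=low, upper=high)
    return df


if __name__ == "__main__":
    raw = pd.DataFrame({
        "group": ["a", "b", "a", "b", "a", "b"],
        "value": [1.0, 2.0, 3.0, 4.0, 1000.0, np.nan],  # one outlier, one gap
    })
    print(clean(raw, clip_columns=["value"]))
```

Note the order: imputing first means the mean is pulled up by the outlier (here the gap gets 202.0). Clipping before imputing would give a less skewed fill value, which is one reason mean imputation is "not always the best approach".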

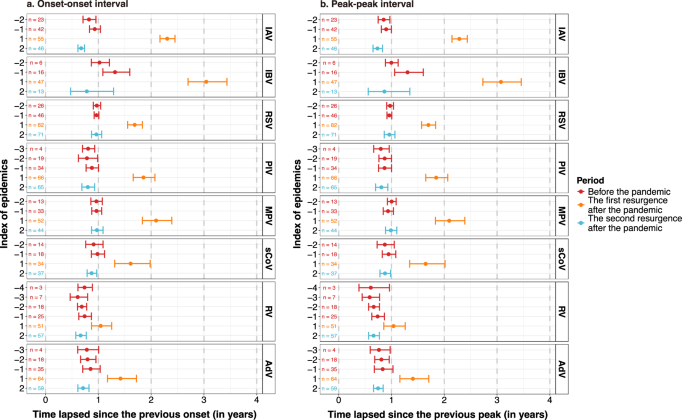

Once the data was clean, I started exploring it. I used Matplotlib and Seaborn to create a bunch of different plots: histograms to see the distribution of each column, scatter plots to look for relationships between pairs of columns, and box plots to compare different groups. I also calculated summary statistics like the mean, median, and standard deviation for each column.
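A rough sketch of that exploration step. It sticks to pandas' built-in Matplotlib plotting to keep dependencies small; Seaborn's `sns.histplot`, `sns.scatterplot`, and `sns.boxplot` would be natural drop-ins. The helper names and the column arguments `x`, `y`, and `group` are hypothetical placeholders, since the post doesn't list the dataset's columns.

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering for scripts; drop this inside Jupyter
import matplotlib.pyplot as plt
import pandas as pd


def summarize(df):
    """Mean, median and (sample) standard deviation of every numeric column."""
    return df.select_dtypes(include="number").agg(["mean", "median", "std"])


def explore_plots(df, x, y, group):
    """Histogram of y, scatter of x vs y, and a box plot of y per group."""
    fig, (ax_hist, ax_scatter, ax_box) = plt.subplots(1, 3, figsize=(15, 4))
    df[y].plot.hist(ax=ax_hist, bins=20, title=f"Distribution of {y}")
    df.plot.scatter(x=x, y=y, ax=ax_scatter, title=f"{y} vs {x}")
    df.boxplot(column=y, by=group, ax=ax_box)  # one box per group value
    fig.tight_layout()
    return fig


if __name__ == "__main__":
    demo = pd.DataFrame({
        "x": [1.0, 2.0, 3.0, 4.0],
        "y": [2.0, 4.0, 6.0, 8.0],
        "group": ["a", "a", "b", "b"],
    })
    print(summarize(demo))
    fig = explore_plots(demo, x="x", y="y", group="group")
    fig.savefig("eda.png")
    plt.close(fig)
```

`df.describe()` gives a similar table in one call, though it labels the median as `50%`.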

I tried out some simple regression models using scikit-learn. I split the data into training and testing sets, trained a linear regression model, and then evaluated its performance on the testing set. The results weren’t amazing, but it was a good exercise in applying machine learning techniques to real-world data.
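The split, fit, and evaluate loop, sketched with scikit-learn's `train_test_split`, `LinearRegression`, `r2_score`, and `mean_absolute_error`. The post doesn't say which features, split ratio, or metrics were used, so the 80/20 split, the fixed `random_state`, R² plus MAE, and the synthetic `y = 3x + 5` demo data are all assumptions for illustration.

```python
import numpy as np
import pandas as pd
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error, r2_score
from sklearn.model_selection import train_test_split


def fit_and_evaluate(df, features, target, test_size=0.2, seed=42):
    """Hold out a test split, fit ordinary least squares, score the held-out rows."""
    X_train, X_test, y_train, y_test = train_test_split(
        df[features], df[target], test_size=test_size, random_state=seed
    )
    model = LinearRegression().fit(X_train, y_train)
    preds = model.predict(X_test)
    scores = {
        "r2": r2_score(y_test, preds),
        "mae": mean_absolute_error(y_test, preds),
    }
    return model, scores


if __name__ == "__main__":
    # Synthetic stand-in data: y = 3x + 5 plus Gaussian noise.
    rng = np.random.default_rng(0)
    x = rng.uniform(0, 10, size=200)
    demo = pd.DataFrame({"x": x, "y": 3 * x + 5 + rng.normal(0, 1, size=200)})
    model, scores = fit_and_evaluate(demo, features=["x"], target="y")
    print(model.coef_, model.intercept_, scores)
```

Pinning `random_state` makes the train/test split identical on every run, which matters if the goal is for others to reproduce the exact numbers.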

Finally, I committed all my code and plots to a Git repository on GitHub and wrote a short README describing the project and how to run it. That way, anyone can check it out and reproduce my results.

I learned a couple of things from this project. Data cleaning is always a pain, but it’s essential. Visualization is super helpful for understanding your data. I’ll keep messing around with the dataset.