Okay, so yesterday I was messing around with some tennis data, trying to see if I could predict the outcome of the Bergs vs. Musetti match. Figured I’d share what I did, ’cause why not?

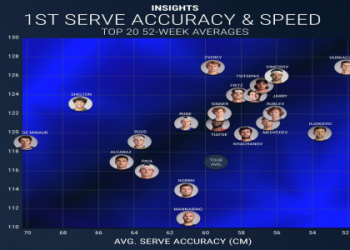

First things first, data collection. I scraped match stats from a couple of different tennis websites. You know, aces, double faults, first serve percentage, all that jazz. I also grabbed some historical data on both players – their past performance on different surfaces, their rankings, win-loss records. Basically, anything I could get my hands on that might be relevant.

Next up, data cleaning. This was a pain. Different websites format things differently, so I had to wrangle the data into a consistent format. Missing values everywhere! I ended up imputing some of them using the average values for similar players and match conditions. It’s not perfect, but it’s better than just throwing the data point away.
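A minimal sketch of that kind of group-average imputation, assuming pandas. The column names (`surface`, `first_serve_pct`) and the tiny table are made up for illustration; the real scraped data would look different.

```python
import numpy as np
import pandas as pd

def impute_group_mean(df, group_cols, value_cols):
    """Fill missing values with the mean of rows sharing the same group keys."""
    out = df.copy()
    for col in value_cols:
        # Mean of the column within each group (NaNs are skipped by default).
        group_mean = out.groupby(group_cols)[col].transform("mean")
        # Fall back to the overall mean if a whole group is missing.
        out[col] = out[col].fillna(group_mean).fillna(out[col].mean())
    return out

# Hypothetical data: two missing first-serve percentages.
matches = pd.DataFrame({
    "surface": ["clay", "clay", "clay", "hard", "hard"],
    "first_serve_pct": [60.0, np.nan, 70.0, 55.0, np.nan],
})
filled = impute_group_mean(matches, ["surface"], ["first_serve_pct"])
```

Grouping by more keys (say, surface plus a ranking bucket) gets closer to "similar players and match conditions", at the cost of smaller groups to average over.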

Feature engineering time! This is where things got a little more interesting. I calculated some new features, like the difference in their ranking points, their recent form (average performance over the last 5 matches), and their head-to-head record. I thought these might give me a better signal than just the raw stats.
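Two of those features could be sketched like this with pandas. Everything here is an assumption for illustration: the column names (`player`, `date`, `won`, `p1_points`, `p2_points`), the use of win rate as the "performance" measure, and the choice to exclude the current match from its own form value so the feature doesn't leak the result.

```python
import pandas as pd

def add_recent_form(results, window=5):
    # results: one row per player per match, with hypothetical columns
    # player, date, won (1 = win, 0 = loss).
    out = results.sort_values(["player", "date"]).copy()
    # shift(1) drops the current match, so form only uses earlier matches.
    out["recent_form"] = out.groupby("player")["won"].transform(
        lambda s: s.shift(1).rolling(window, min_periods=1).mean()
    )
    return out

def add_points_diff(pairs):
    # pairs: one row per matchup with each player's ranking points.
    out = pairs.copy()
    out["points_diff"] = out["p1_points"] - out["p2_points"]
    return out

# Hypothetical match history for a single player.
results = pd.DataFrame({
    "player": ["A"] * 6,
    "date": pd.date_range("2024-01-01", periods=6, freq="7D"),
    "won": [1, 0, 1, 1, 0, 1],
})
form = add_recent_form(results)

pairs = pd.DataFrame({"p1_points": [1500], "p2_points": [2100]})
diffed = add_points_diff(pairs)
```

A player's first match has no history, so its `recent_form` is missing and would need the same kind of imputation as any other gap.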

Then, model selection. I wanted something relatively simple, so I started with a logistic regression model. I also tried a random forest model, just to see if it would perform any better. I used scikit-learn in Python, of course. It’s my go-to for this kind of stuff.
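Setting up those two models in scikit-learn might look something like the sketch below. The hyperparameters (`max_iter=1000`, `n_estimators=200`) and the scaling step in front of the logistic regression are assumptions, not settings from the actual project.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

def build_models(seed=0):
    """Return the two candidate classifiers, keyed by a short name."""
    return {
        # Scaling helps logistic regression when features have very
        # different ranges (e.g. ranking points vs. percentages).
        "logreg": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        # Tree ensembles don't need scaling; the seed makes runs repeatable.
        "random_forest": RandomForestClassifier(n_estimators=200, random_state=seed),
    }

models = build_models()
```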

Training and evaluation. I split the data into training and testing sets. Trained both models on the training data, and then evaluated their performance on the testing data. I used accuracy and F1-score as my evaluation metrics. Nothing fancy.
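The split-train-score loop can be sketched as follows. The data comes from `make_classification`, a synthetic stand-in for the real match features, and the 25% test size and stratified split are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score
from sklearn.model_selection import train_test_split

def evaluate(model, X, y, test_size=0.25, seed=0):
    """Fit on a training split and report accuracy and F1 on the held-out split."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=test_size, random_state=seed, stratify=y
    )
    model.fit(X_train, y_train)
    pred = model.predict(X_test)
    return {"accuracy": accuracy_score(y_test, pred), "f1": f1_score(y_test, pred)}

# Synthetic binary data standing in for (features, match winner).
X, y = make_classification(n_samples=200, n_features=6, random_state=0)
scores = evaluate(LogisticRegression(max_iter=1000), X, y)
print(scores)
```

The same `evaluate` call works for a random forest or any other scikit-learn classifier, which keeps the comparison between models fair.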

And the result? Honestly, not great. Both models performed only slightly better than chance. The random forest model was a little bit better than the logistic regression, but not by much. I think the biggest problem is the limited amount of data. Tennis matches can be really variable, and you need a lot of data to train a reliable predictive model.
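One way to put a number on "slightly better than chance" is to score a dummy baseline next to the real model. This is a sketch on synthetic data, not the project's actual check; `DummyClassifier` with `strategy="most_frequent"` and 5-fold cross-validation are my assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

def compare_to_baseline(model, X, y, folds=5):
    """Cross-validated accuracy for a model and for an always-guess-majority baseline."""
    baseline = DummyClassifier(strategy="most_frequent")
    base = cross_val_score(baseline, X, y, cv=folds, scoring="accuracy")
    real = cross_val_score(model, X, y, cv=folds, scoring="accuracy")
    return {
        "baseline_mean": base.mean(),
        "model_mean": real.mean(),
        # Spread across folds hints at how noisy the estimate is.
        "model_std": real.std(),
    }

# Synthetic binary data standing in for the real match features.
X, y = make_classification(n_samples=200, n_features=6, random_state=0)
report = compare_to_baseline(LogisticRegression(max_iter=1000), X, y)
print(report)
```

With a small dataset, the fold-to-fold spread can be as large as the gap over the baseline, which is one sign that more data is needed before trusting the model.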

- I tried tweaking the features, adding more data, and swapping in different models, but at the end of the day the accuracy was still low.

- I did learn a lot about data scraping and feature engineering.

Final thoughts. Predicting tennis matches is harder than it looks! It’s not as simple as feeding data into a model. I need better data, and maybe I should incorporate weather data or even psychological factors. It was still a fun little project. Maybe I’ll try again at the next Grand Slam.